Research

Hearing loss is not the same as lowering the volume on sounds in the world; it is a distortion of sound that leads to increased effort, fatigue, and frustration when trying to understand speech. These all impact a person’s quality of life, ability to function in the workplace, and willingness to socialize. My research addresses the challenge of measuring and describing listening effort in a way that reflects the everyday struggles of people with hearing loss, and which compels clinical assessments to evolve to recognize effort as an integral part of the listening experience. The lab is supported by the NIH National Institute on Deafness and Other Communication Disorders (NIDCD) grant R01DC017114, "Listening effort in cochlear implant users."

These are the main areas of work: Listening effort, Cochlear implants, Speech perception, Acoustic cue weighting, Binaural hearing, Data visualization


Listening effort

People with hearing impairment need to put in more mental effort for routine conversational listening. As a result, by the end of the day there can be little energy left for socializing, playing with the kids, or other adventures. Audiologists frequently hear stories from patients who once enjoyed fun things like the theater, dining out, game night, church and the comedy club, but who now think it’s simply not worth the hassle. Sometimes people with hearing loss slowly change their lives in ways that they don't even notice, and then years later discover that life has changed dramatically.

Listening effort likely plays a role in the increased prevalence of sick leave, unemployment, and early retirement among people with hearing difficulty.

At the Listen Lab, we aim to measure the listening effort involved in speech perception, and to look for unexpected ways that effort might affect people.

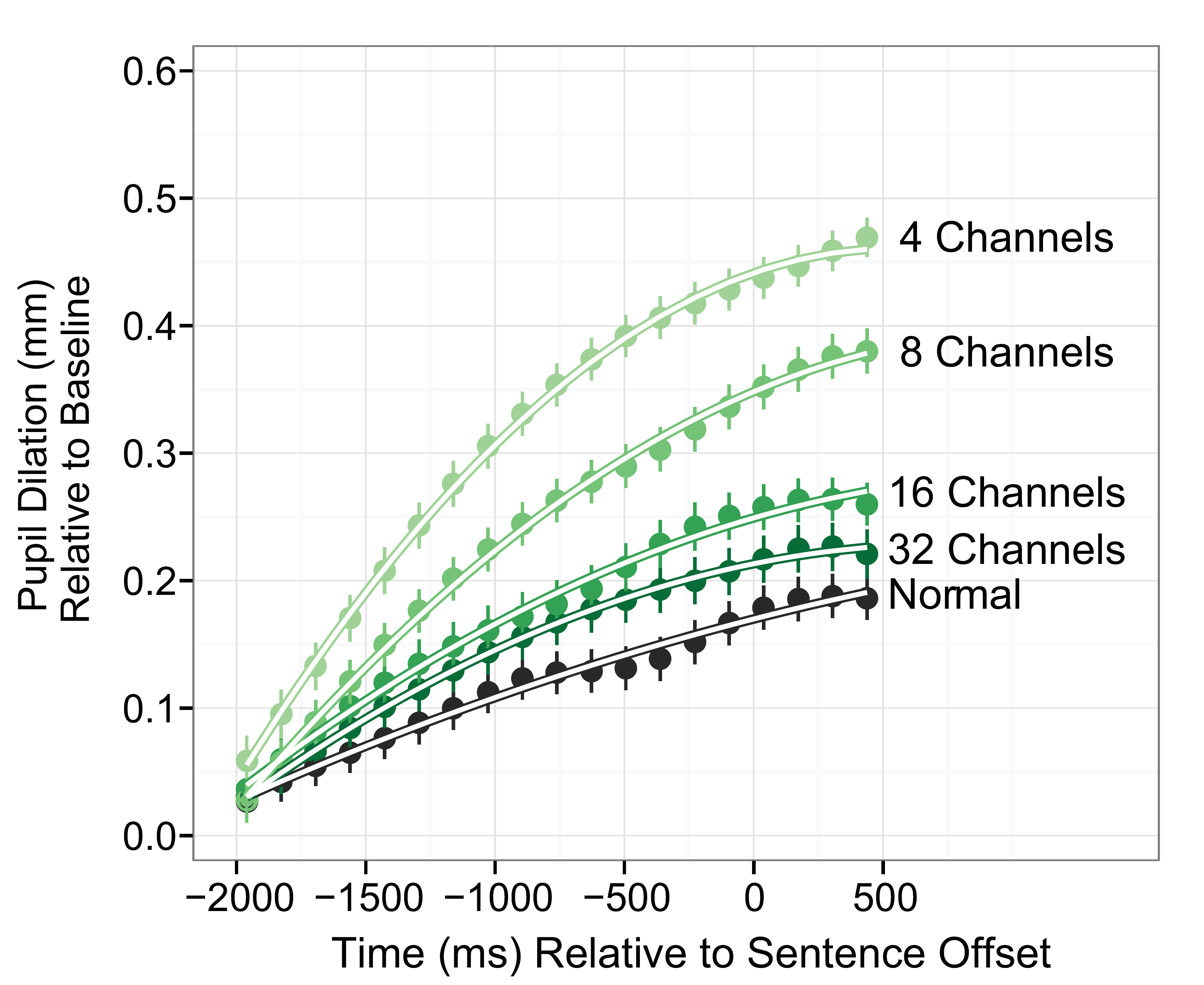

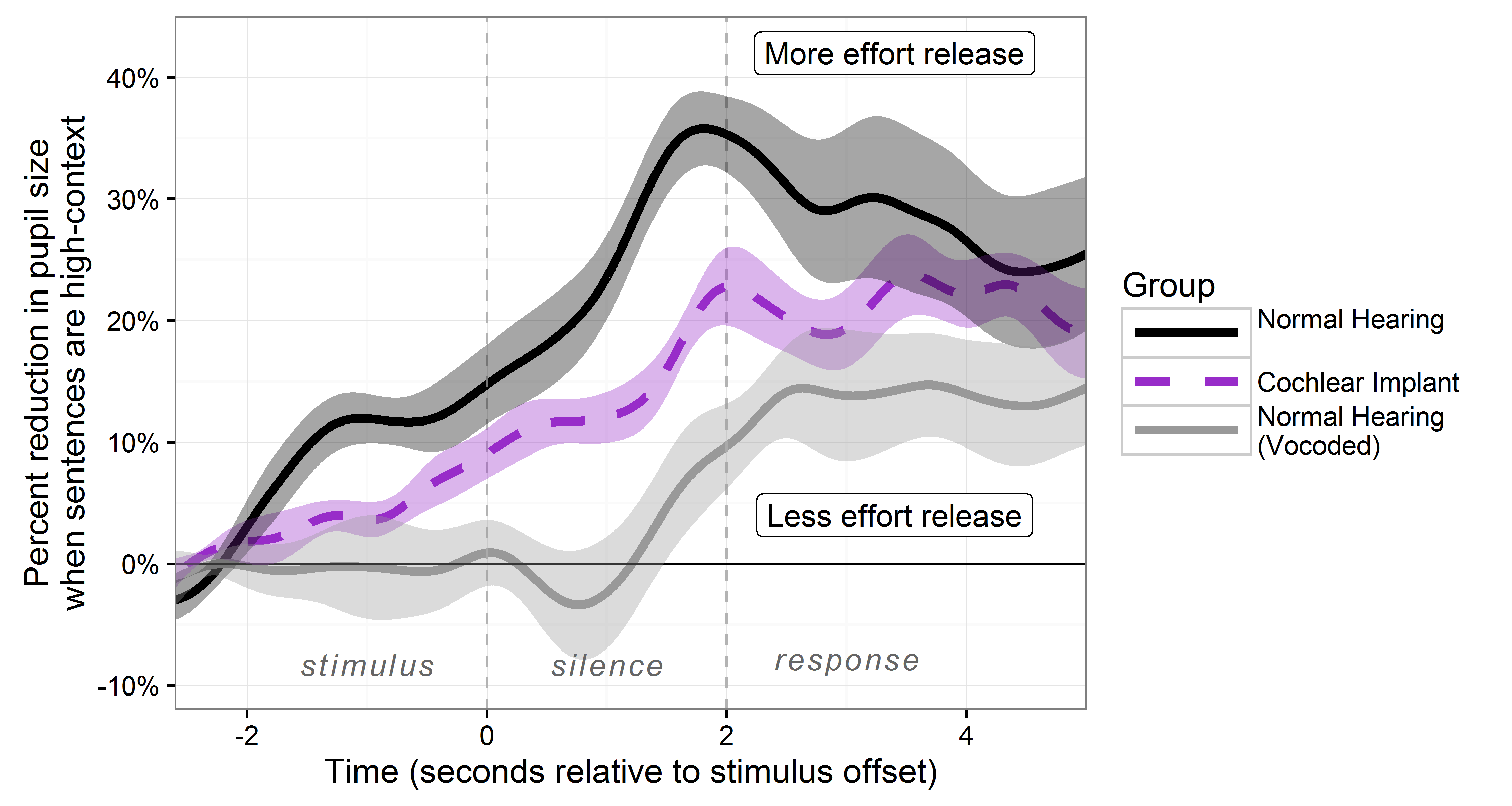

One of our main tools for measuring listening effort is pupil dilation. When a person is under more stress and tries harder, the pupils dilate more. We use that as a measure of how difficult it is to listen to speech.
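As a rough illustration of how a pupil recording might be turned into an effort measure, the sketch below expresses dilation relative to a pre-sentence baseline and reports the peak. The function name, the baseline window, and the sample values are all assumptions made for this example; it is not a description of the lab's actual analysis.

```python
from statistics import mean


def peak_relative_dilation(trace, n_baseline):
    """Return the peak pupil dilation as a proportion of baseline size.

    trace: pupil diameter samples for one trial (hypothetical units, e.g. mm)
    n_baseline: number of leading samples recorded before the sentence starts
    """
    if not 0 < n_baseline < len(trace):
        raise ValueError("n_baseline must leave samples on both sides")
    baseline = mean(trace[:n_baseline])
    # Express every later sample as proportional change from the baseline.
    relative = [(sample - baseline) / baseline for sample in trace[n_baseline:]]
    return max(relative)


# Made-up trials: four baseline samples, then dilation while listening.
easy_trial = [4.0, 4.0, 4.0, 4.0, 4.1, 4.2, 4.1, 4.0]
hard_trial = [4.0, 4.0, 4.0, 4.0, 4.3, 4.8, 4.6, 4.2]
print(peak_relative_dilation(easy_trial, 4))
print(peak_relative_dilation(hard_trial, 4))
```

In this toy example the harder trial produces the larger peak, which is the pattern the measure is meant to capture.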

What affects listening effort?

The acoustic clarity of sentences

Winn, Edwards and Litovsky (2015) showed that degradation in the frequency resolution (clarity) of speech is related to corresponding increases in listening effort. This is important because people with cochlear hearing loss and cochlear implants experience degraded frequency resolution.

The presence or absence of contextual cues within a sentence

Winn (2016) found that sentences that have internal coherence (e.g. "The detective searched for a clue") are easier to process than sentences with unpredictable words (e.g. "Nobody heard about the clue"). This is important because it demonstrates that we don't listen one word at a time; we take advantage of the meaning of words to ease the effort of ongoing listening.

Using a cochlear implant

In the same study by Winn (2016), individuals who use cochlear implants showed delayed processing of contextual cues, possibly suggesting slower language processing because of the poor sound clarity. This is important because it's not just about how many words you get correct, but how efficiently you can process the meaning and be sure of what you heard.

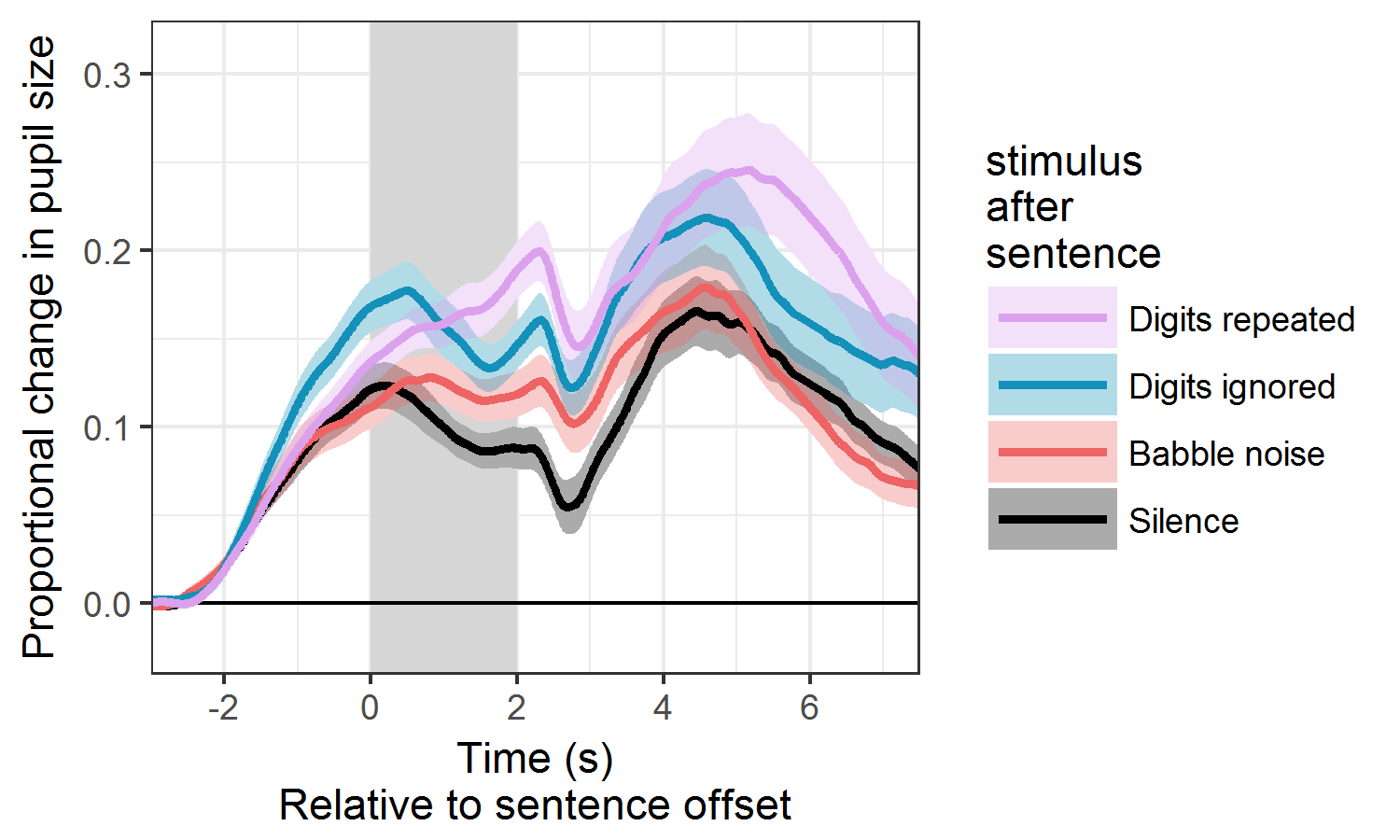

Distracting sound just after a sentence

In a study by Winn and Moore (2018), sentences were heard by listeners with cochlear implants. We suspected that they used an extra moment after the sentence to think back and "repair" words that were misperceived along the way. To test this, we inserted a bit of noise or extra speech after the sentence, and found that this had a negative impact on their ability to use context to reduce listening effort. This is important because in clinical hearing tests, people can use an extra moment to think of the answer and improve their performance, but that extra moment might not be available in everyday conversation.

Speaking rate

When speech is spoken more slowly, listeners with cochlear implants are more likely to experience reduced effort and better processing of contextual cues. A slower speaking rate also reduces the need to continue putting effort into a sentence you just heard (Winn & Teece, 2021). This is important because it demonstrates that doing what people with hearing loss always ask (speak more slowly) has a real benefit.

Mentally correcting a misperception

When a person repeats a sentence correctly, that doesn't mean they heard the sentence correctly. Sometimes one or two of the words are misperceived, but the brain can work to fill them in using context. This backward mental reflection is effortful (Winn & Teece, 2021). This is important because in clinical tests of hearing, we measure whether a person correctly repeated a sentence, but do not recognize the work it took them to figure out the answer. This study demonstrates that even if the answer is correct, it could have been effortful.

Searching for meaning

Getting a word wrong doesn't necessarily mean that speech understanding is effortful. The problematic situation is when you think you heard the words but can't connect the meaning across them (Winn & Teece, 2021). This is important because clinical tests of hearing often use single words at a time, but this study demonstrates that it's the connection of meaning across words (not perception of individual words) that leads to big changes in effort.

There are MANY questions that remain to be explored with regard to listening effort.

For example:

How can listening effort measures be adapted for use in an audiology clinic?

How is effort affected by your knowledge of conversation topic?

How is effort affected by the talker's accent and prosody?

How does a person decide when a situation is too effortful to continue trying?

How do lab-based measures of momentary effort relate to a person's experience of listening effort throughout a day and a week?

How do we measure effort efficiently when it's so much easier to measure speech intelligibility?


Cochlear implants

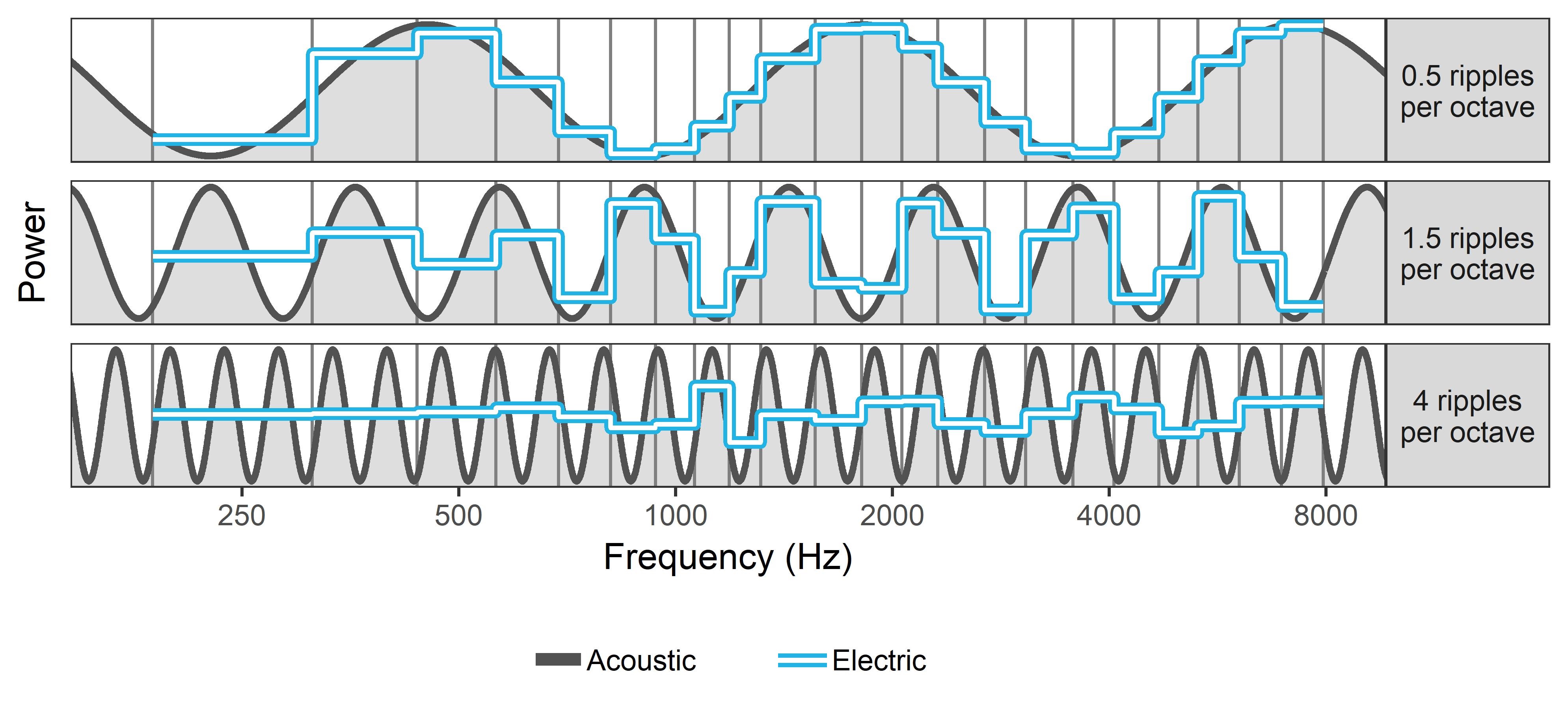

Cochlear implants (CIs) provide a sensation of hearing to people who have severe to profound deafness and who choose to participate in the hearing/speaking community. The microphone and speech processor receive sound through the air and convert the sound information into a sequence of electrical pulses that are used to stimulate the auditory nerve. This is designed to parallel the normal process of hearing, where mechanical movements in the ear are translated into electrical stimulation for the nerves.

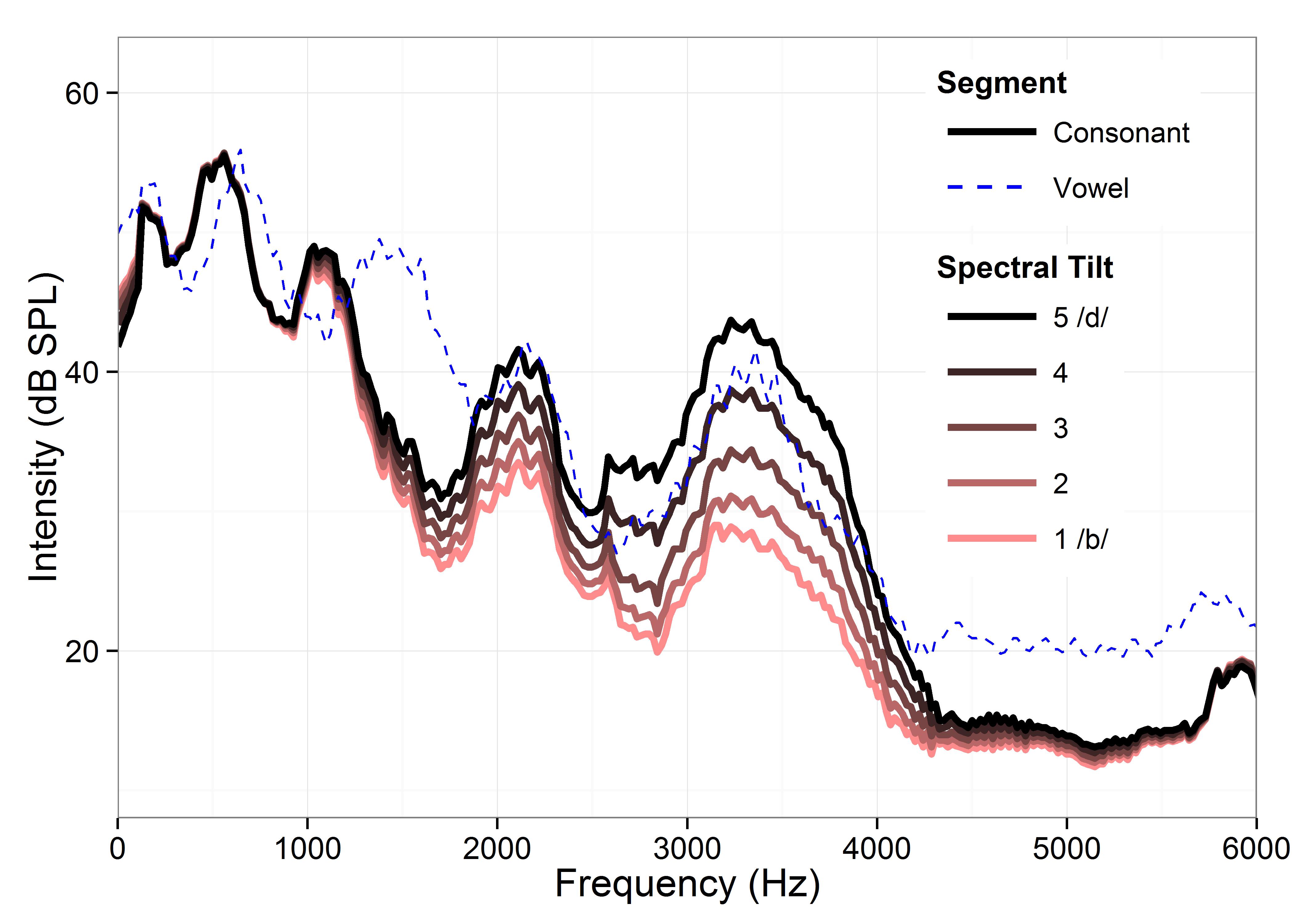

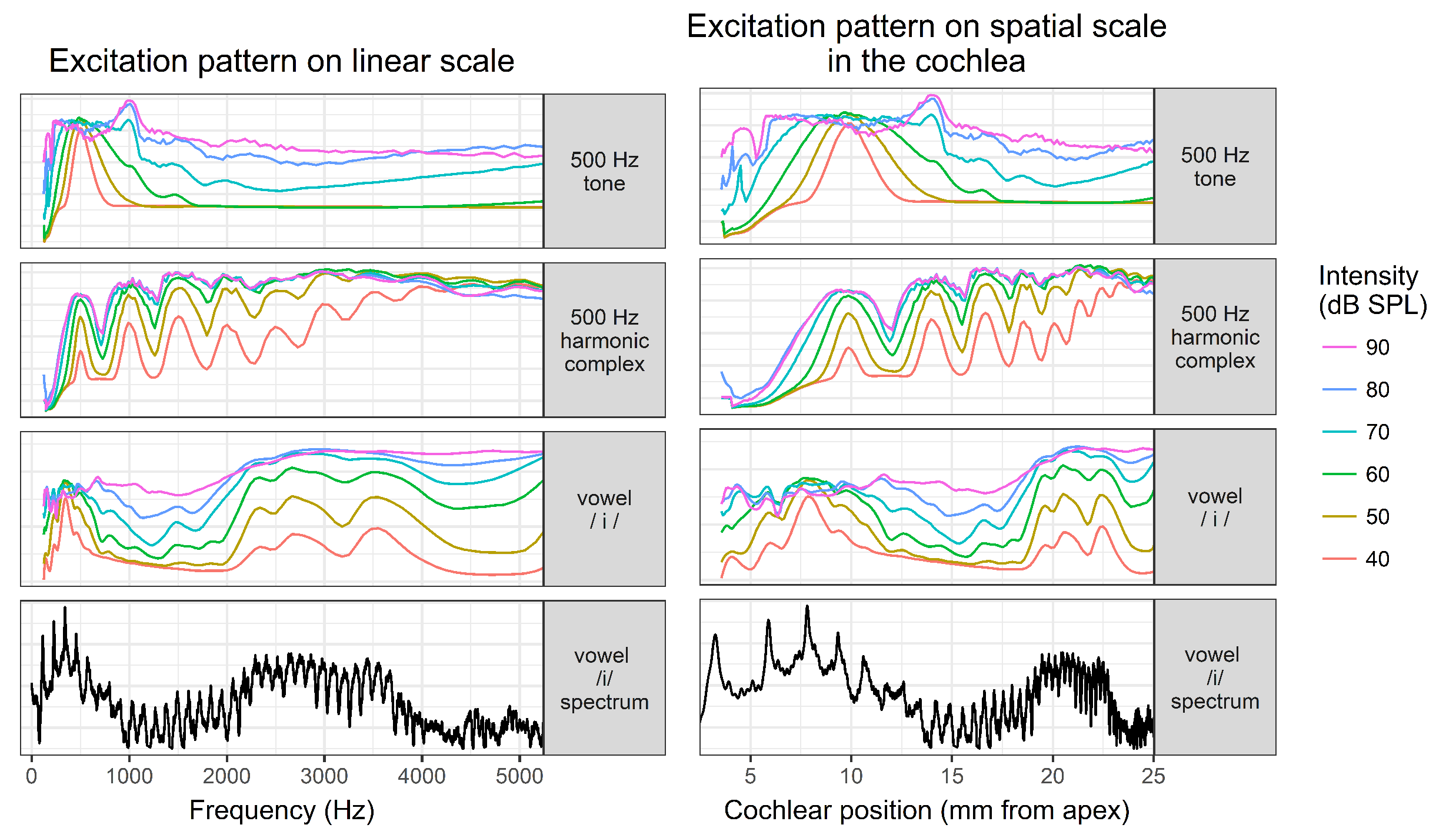

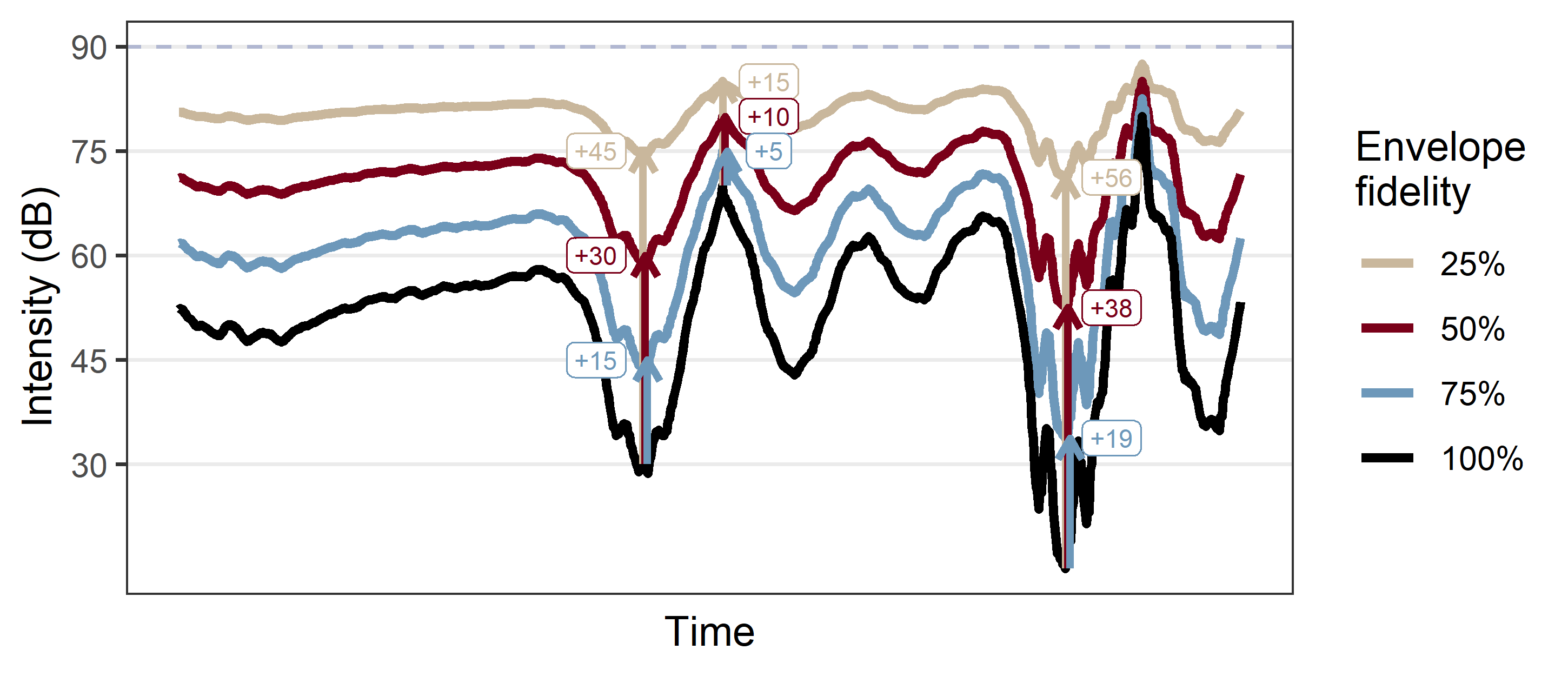

The Listen Lab research on CIs focuses on the representation of spectral (frequency) information, which is known to be severely degraded. We are interested in the perceptual consequences of degraded spectral resolution in terms of success in speech perception, acoustic cue weighting, and the effort required to understand speech.

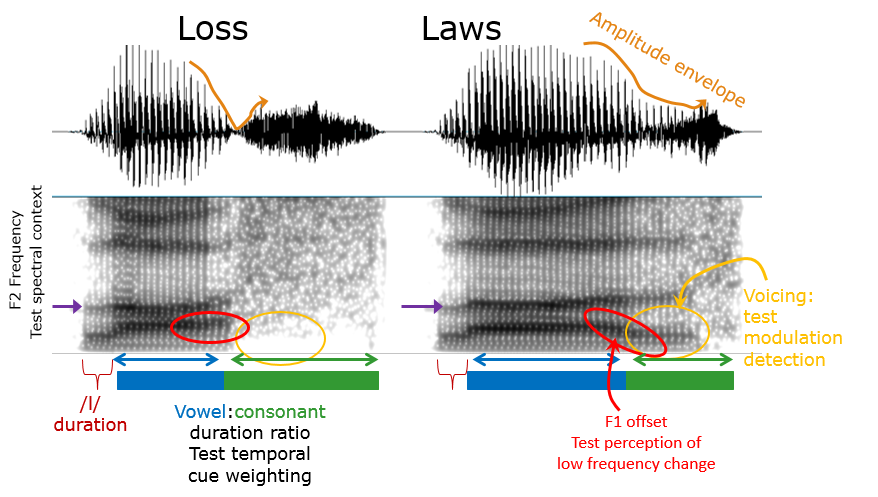

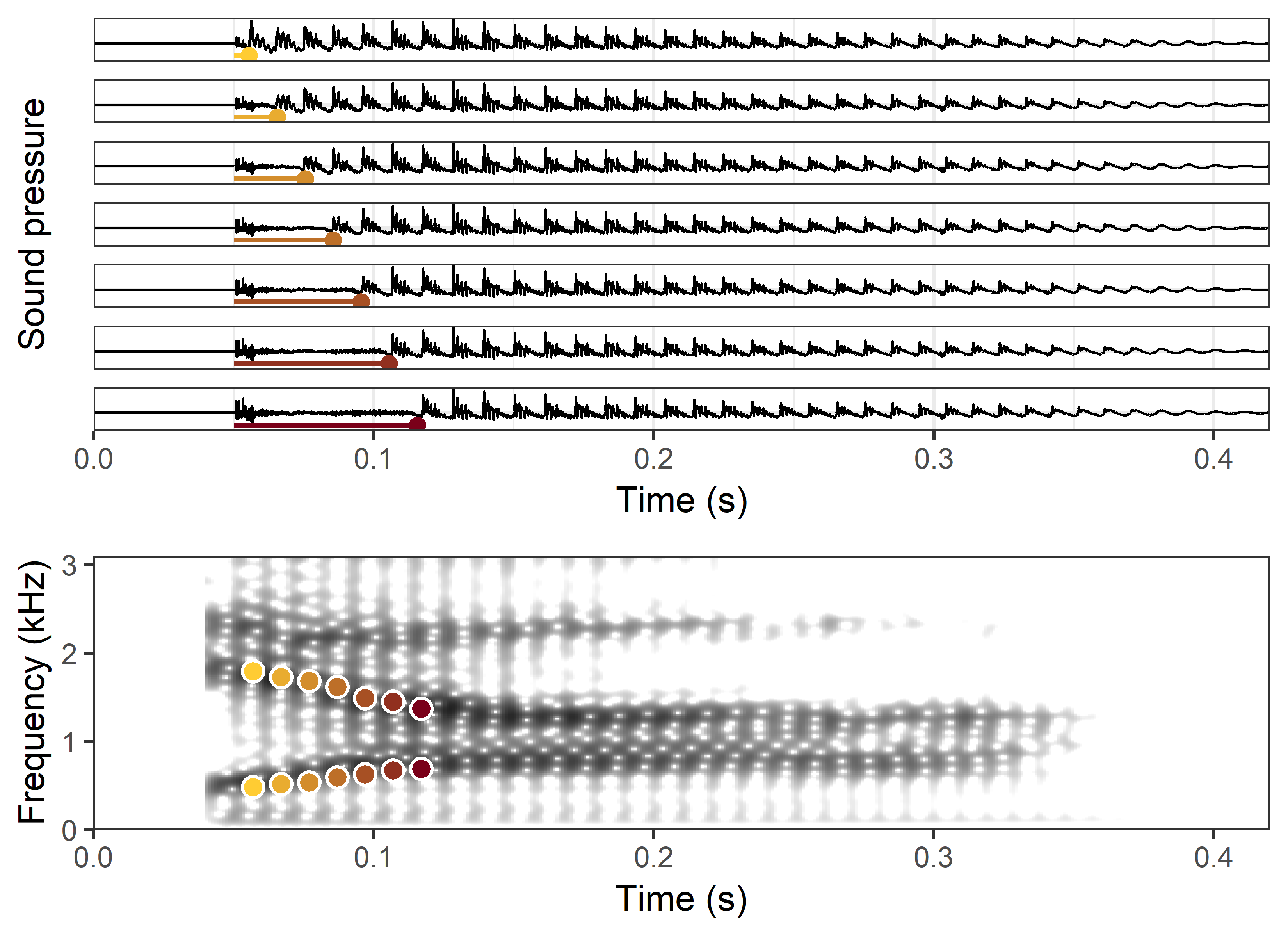

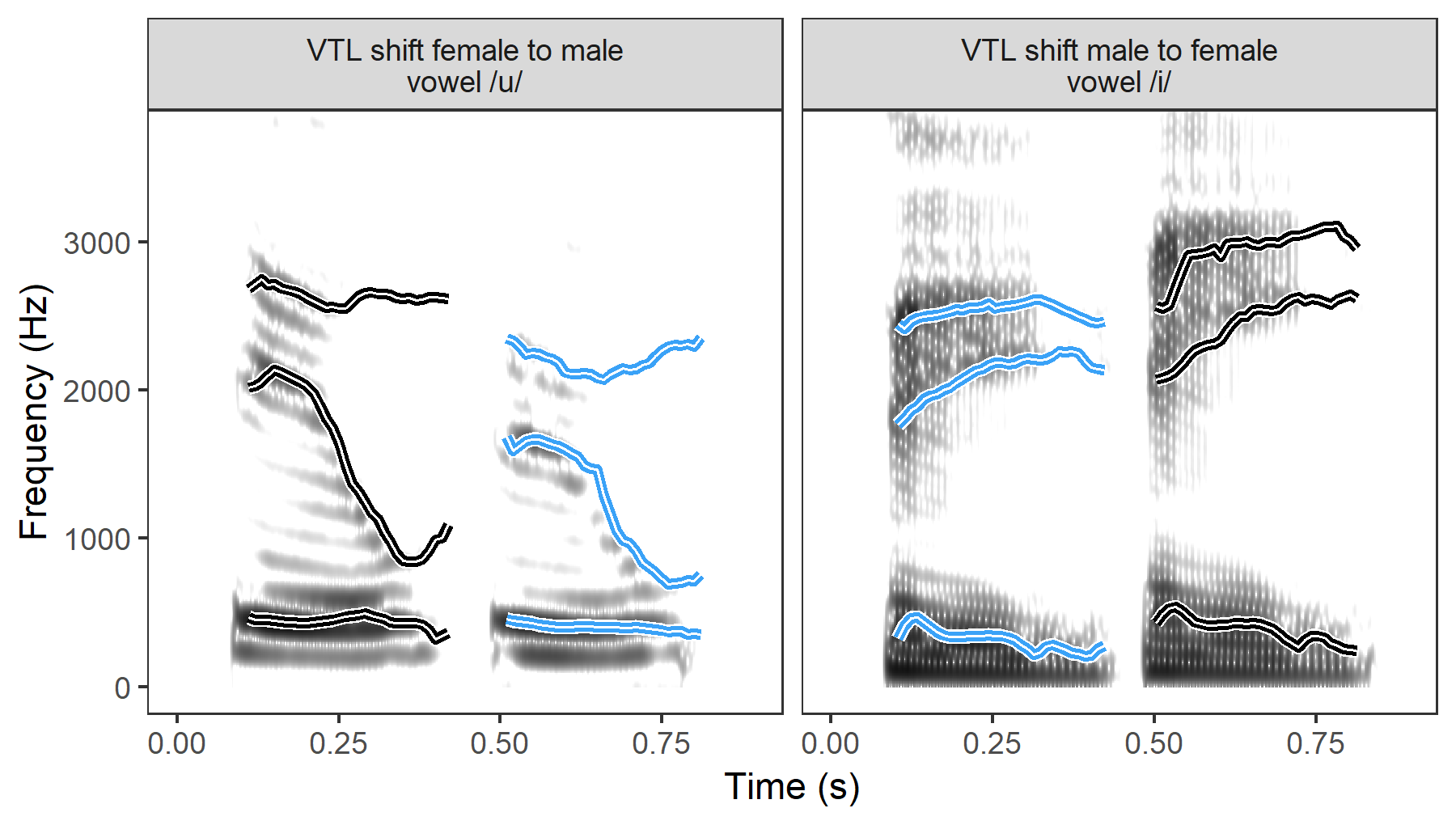

Using speech to learn about the auditory system

A central technique of the lab is to use speech sounds to learn about the auditory system. We are interested in developing ways to create high-quality realistic speech stimuli that are suitable for testing auditory processing.

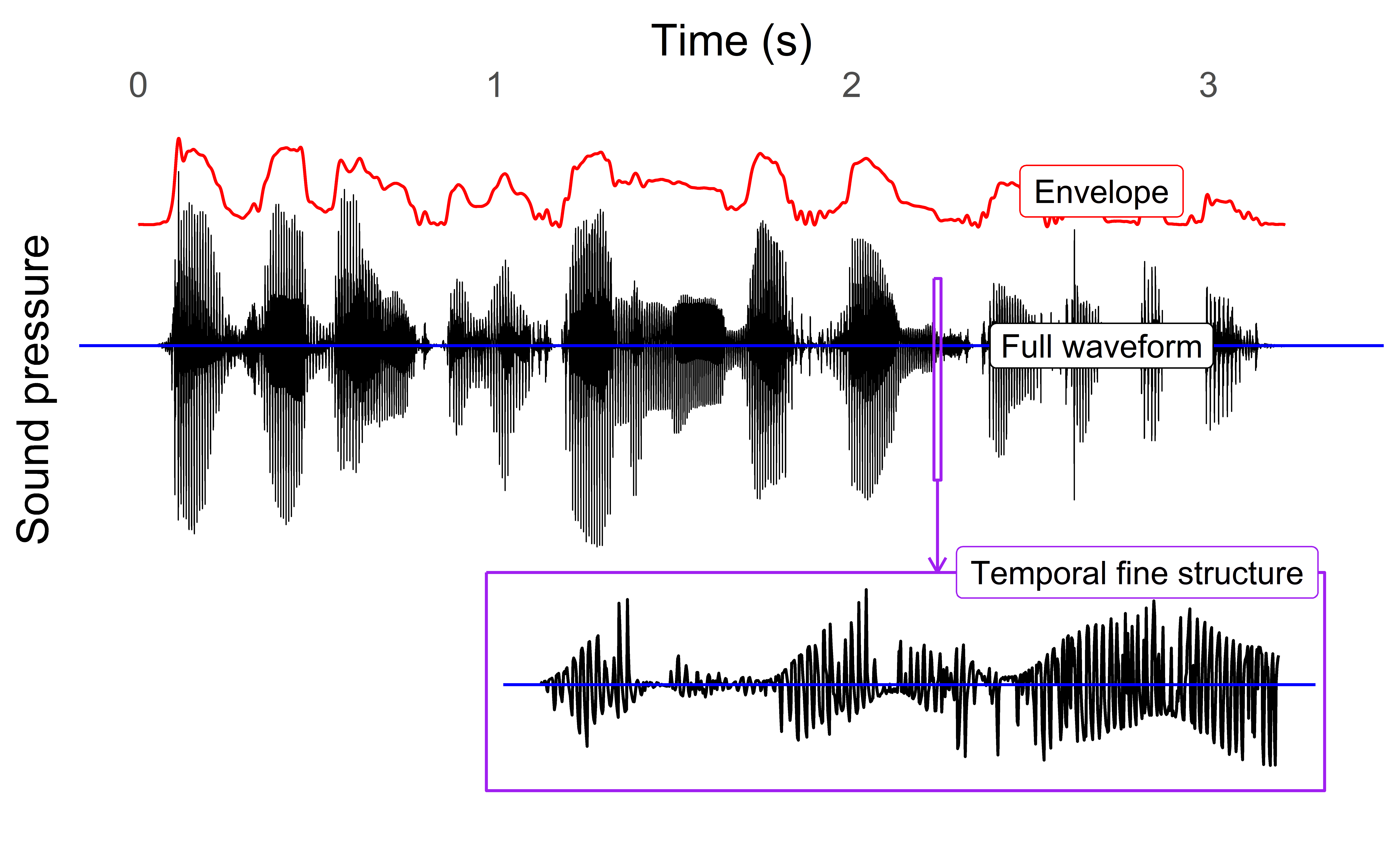

Speech contains a variety of acoustic components that stimulate the auditory system in the spectral, temporal, and spectro-temporal domains. There are so many dimensions to explore! By exploiting these properties of speech, we can learn a lot about the corresponding properties of the auditory system.

Such exploration is typically done with non-speech sounds in psychoacoustic experiments. There is a rich history of psychoacoustics, but a surprising lack of connection to real components of speech sounds. We hope to bridge that gap and, in the process, learn something valuable about how to understand the perception of speech by people with normal hearing and by people with hearing impairment.

For any particular acoustic cue that you’re interested in, there is usually at least one speech contrast that depends on that cue.

Together with Christian Stilp (University of Louisville), Matthew Winn has authored a chapter in the Routledge Handbook of Phonetics that introduces some ways in which thinking about the auditory system and speech acoustics at the same time can lead to a richer understanding of each.


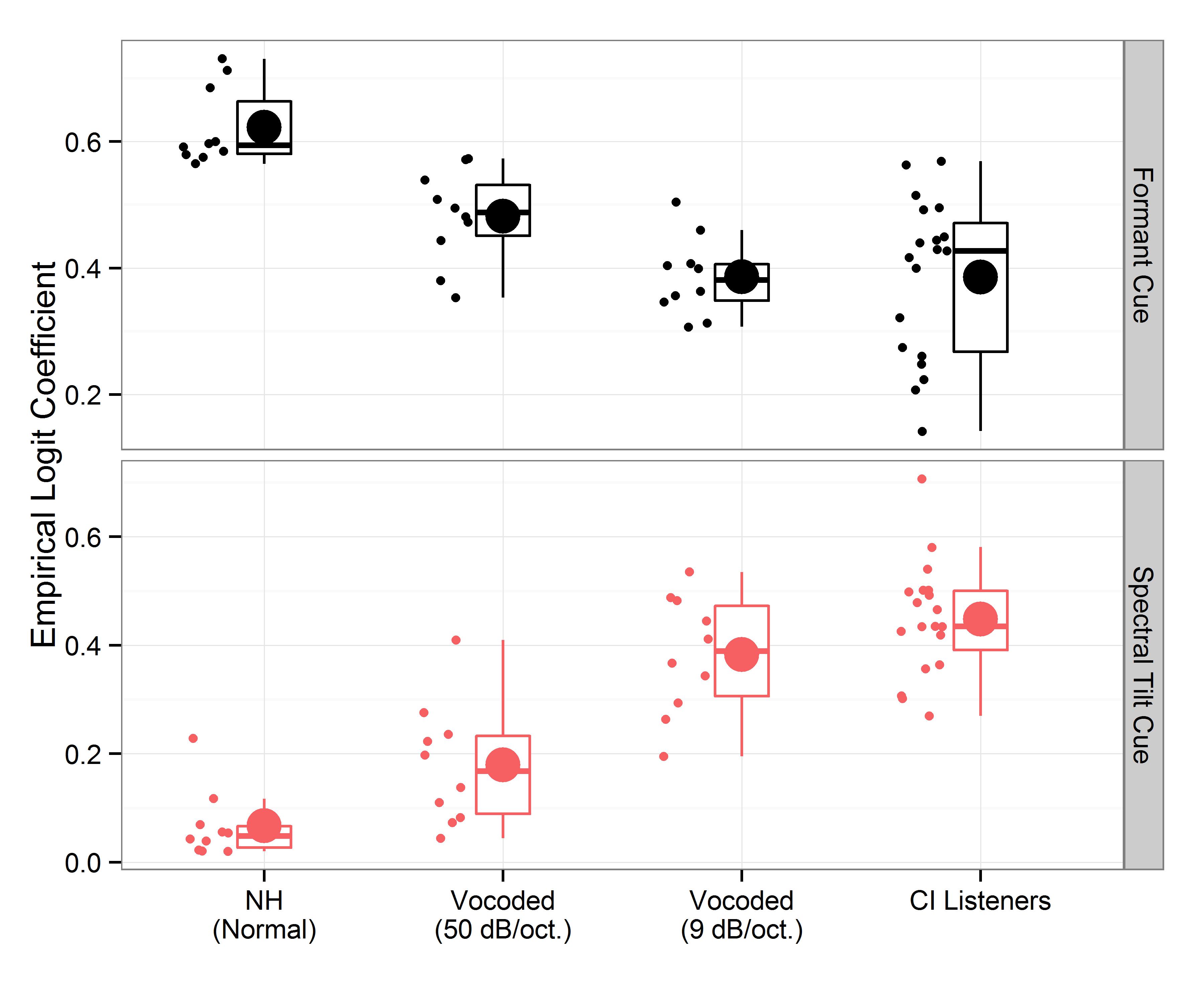

Acoustic cue weighting

With so many aspects of speech changing at the same time, people have multiple options for different “strategies” to identify speech sounds.

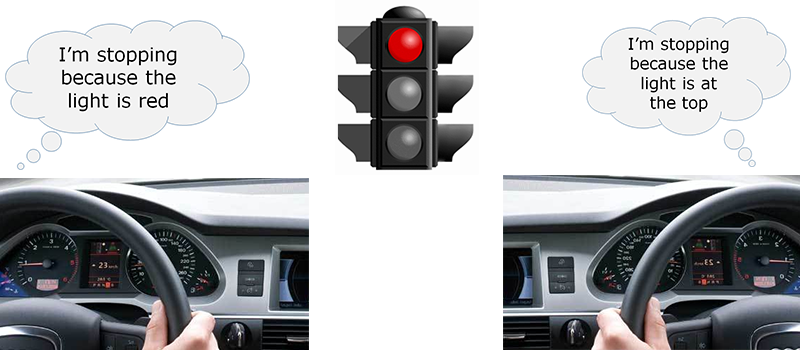

A good analogy to understand cue weighting is the different cues at a traffic light.

Different people can use different strategies to obtain exactly the same information: that it’s okay to go, or that it’s time to stop. Other cues include seeing other cars move around you, or hearing blaring horns from cars behind you (but not in the Midwest!).

The Listen Lab studies how multiple cues in speech sounds can be decoded by people who try to identify the speech. This is particularly important for understanding hearing impairment, which can force some people to tune in to cues that are different from the ones used by people with normal hearing.

Some of our previous work suggests that listeners increase reliance upon temporal cues when frequency resolution is degraded. This has particular implications for people who use cochlear implants, because they are known to experience especially poor frequency resolution.
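One common way to put numbers on cue weights is to fit a logistic regression that predicts a listener's responses from the cue values, and then compare the fitted coefficients. The sketch below does this with plain gradient descent for two cues. The listener, the cue steps, and the function names are hypothetical, and this is an illustration of the general idea rather than the lab's own analysis.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_cue_weights(stimuli, p_response, lr=0.5, n_iter=5000):
    """Fit logistic-regression weights for two cues by gradient descent.

    stimuli: list of (spectral_cue, temporal_cue) values, roughly in [-1, 1]
    p_response: proportion of one response category for each stimulus
    Returns (bias, spectral_weight, temporal_weight).
    """
    b = w_s = w_t = 0.0
    n = len(stimuli)
    for _ in range(n_iter):
        g_b = g_s = g_t = 0.0
        for (s, t), p in zip(stimuli, p_response):
            # Prediction error drives the gradient of the cross-entropy loss.
            err = sigmoid(b + w_s * s + w_t * t) - p
            g_b += err
            g_s += err * s
            g_t += err * t
        b -= lr * g_b / n
        w_s -= lr * g_s / n
        w_t -= lr * g_t / n
    return b, w_s, w_t


# Hypothetical listener who relies mostly on the spectral cue.
steps = [-1.0, -0.5, 0.0, 0.5, 1.0]
stimuli = [(s, t) for s in steps for t in steps]
p_response = [sigmoid(2.0 * s + 0.5 * t) for s, t in stimuli]

bias, w_spectral, w_temporal = fit_cue_weights(stimuli, p_response)
print(round(w_spectral, 2), round(w_temporal, 2))
```

Under this framing, a shift toward temporal cues would appear as a smaller spectral weight and a larger temporal weight when the same fit is run on responses to degraded speech.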

Ongoing work explores how cue weighting is connected with listening effort.


Binaural hearing

Binaural hearing refers to the coordination of both ears to learn about sounds in the environment. It's more than just hearing on the left and right; it's the ability to know where sounds are coming from, and to distinguish one sound from a background of noise. To be successful, binaural hearing relies on some of the fastest, most precise coding in the brain.

At the Listen Lab, we have explored binaural hearing sensitivity using high-speed eye-tracking methods that demonstrate the speed, certainty and reliability of our judgments of sound cues. We hope to use this paradigm to test basic psychoacoustic abilities, especially in cases where it is difficult to test (e.g. in children), difficult to coordinate the two ears (e.g. hearing with cochlear implants), or in cases where the binaural system might have been damaged by traumatic brain injury or blast exposure.


Statistical modeling and data visualization

Statistical modeling is an essential part of any research. In the lab, we are enthusiastic about finding the most effective ways to describe data and the behavior that leads up to it.

Thoughtful data visualization is an effective way to help others understand your research. I strive to visualize data in ways that help to reveal unexpected patterns, or that convey information so as to facilitate learning.

To create visualizations, I prefer to use the ggplot2 package in the R programming environment.

You can find a fun new visualization of Marvel's Avengers character scripts here.

You can also view some interesting visualizations of vowel acoustics here.
